The recent sting operation carried out by John Bohannon of Science focuses much-needed attention on the shadiest practices of academic publishing. As described in his great article, Bohannon submitted a fake cancer study, riddled with errors, to 314 open access journals. The sad outcome is that 157 of the journals accepted the paper for publication.

I was curious whether Journal Impact Factors, a commonly used quantitative measure of a journal’s influence (and thus prestige), would predict whether the fake article was accepted for publication. Luckily for me and for anyone interested in this topic, Bohannon and Science have made a summary of their data available for download.

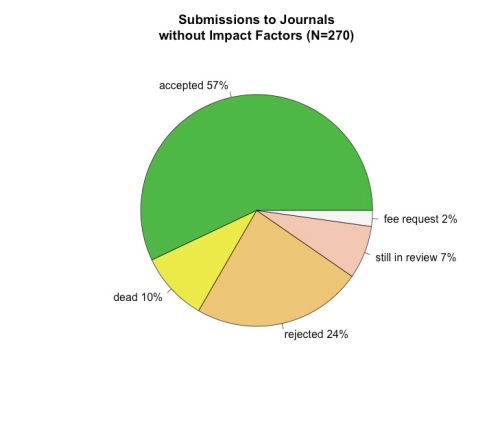

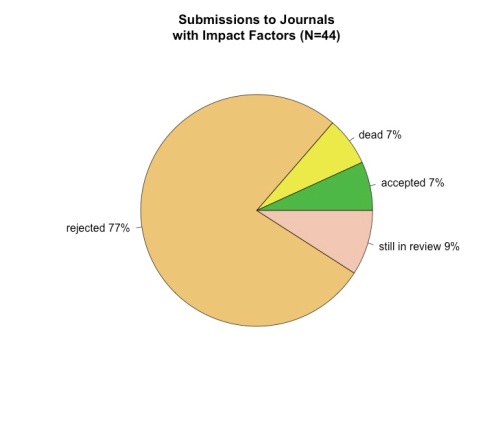

I cross-referenced the list of journals that received the spoof paper against the Thomson Reuters Journal Citation Reports (JCR) Science Edition 2012 database. The great majority of the journals that received the article are not even listed or tracked in JCR Science: only 44 (14%) of them had an entry. While 57% of the journals without a JCR Science listing accepted the paper, only 7% (n=3) of those in the database did so. The two pie charts above illustrate the outcomes of submissions to journals listed and not listed in the JCR Science database.
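The percentages above follow from the counts given in the post (314 journals, 157 acceptances, 44 JCR-listed journals, 3 of which accepted). The sketch below recomputes them; note that the unlisted counts (270 journals, 154 acceptances) are derived here by subtraction and are not stated explicitly in the post.

```python
# Counts reported in the post.
TOTAL, TOTAL_ACCEPTED = 314, 157      # all journals that received the paper
LISTED, LISTED_ACCEPTED = 44, 3       # journals with a JCR Science 2012 entry

# Assumption: the unlisted group is simply everything not in the listed group.
unlisted = TOTAL - LISTED                              # 270 journals
unlisted_accepted = TOTAL_ACCEPTED - LISTED_ACCEPTED   # 154 acceptances

def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number, as in the post."""
    return round(100 * part / whole)

share_listed = pct(LISTED, TOTAL)                        # share of journals in JCR
acceptance_unlisted = pct(unlisted_accepted, unlisted)   # acceptance rate, unlisted
acceptance_listed = pct(LISTED_ACCEPTED, LISTED)         # acceptance rate, listed
print(share_listed, acceptance_unlisted, acceptance_listed)
```

The three printed values match the 14%, 57%, and 7% quoted in the text.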

Since only 3 of the journals listed in the JCR Science database accepted the paper, it is difficult to generalize about the characteristics of such journals. In his article, Bohannon includes excerpts of his email exchange with the editor of one of them, the Journal of International Medical Research, who takes “full responsibility” for the poor editing.

With the caveat that the sample sizes are very small, I compared the 2012 impact factors of listed journals that accepted the paper (n=3) with those of listed journals that rejected it (n=34). The median impact factor was 0.994 for accepting journals and 1.3075 for rejecting journals (p < .05, two-tailed t-test).
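The post reports medians, but a t-test compares group means, and it does not say which t-test variant was used. As a hedged sketch only, the function below computes Welch’s two-sample t statistic, the variant that does not assume equal variances (a reasonable precaution with groups of 3 and 34). The raw impact-factor lists are not given in the post, so the function simply takes any two lists of values; `welch_t` is a hypothetical helper name.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's two-sample t statistic and Welch-Satterthwaite degrees of freedom.

    This compares group *means*, not medians. Each group needs at least two
    values so that its sample variance (n - 1 denominator) is defined.
    """
    se2_a = variance(a) / len(a)   # squared standard error of mean(a)
    se2_b = variance(b) / len(b)   # squared standard error of mean(b)
    t = (mean(a) - mean(b)) / sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df
```

A two-tailed p-value then comes from the t distribution with `df` degrees of freedom; if SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` returns the same statistic together with that p-value.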

The larger takeaway, as I see it, is that journal impact factors are valuable not just as a guide to a paper’s likely influence, but also as a guide to the quality of a journal’s review process.

How do I sign up to get feeds from your blog, Brian?

Hi Iaian, is there not an RSS feed button on the site?

WHERE THE FAULT LIES

To show that the bogus-standards effect is specific to Open Access (OA) journals would, of course, also require submitting the paper to subscription journals (perhaps matched for age and impact factor) to see what happens.

But it is likely that the outcome would still be a higher proportion of acceptances by the OA journals. The reason is simple: Fee-based OA publishing (fee-based “Gold OA”) is premature, as are plans by universities and research funders to pay its costs:

Funds are short, and 80% of journals (including virtually all the top, “must-have” journals) are still subscription-based, which ties up the potential funds to pay for fee-based Gold OA. The asking price for Gold OA is still arbitrary and high. And there is a very, very legitimate concern that paying to publish may inflate acceptance rates and lower quality standards (as the Science sting shows).

What is needed now is for universities and funders to mandate OA self-archiving (of authors’ final peer-reviewed drafts, immediately upon acceptance for publication) in their institutional OA repositories, free for all online (“Green OA”).

That will provide immediate OA. And if and when universal Green OA should go on to make subscriptions unsustainable (because users are satisfied with just the Green OA versions), that will in turn induce journals to cut costs (print edition, online edition), offload access-provision and archiving onto the global network of Green OA repositories, downsize to just providing the service of peer review alone, and convert to the Gold OA cost-recovery model. Meanwhile, the subscription cancellations will have released the funds to pay these residual service costs.

The natural way to charge for the service of peer review will then be on a “no-fault” basis, with the author’s institution or funder paying for each round of refereeing, regardless of outcome (acceptance, revision and re-refereeing, or rejection). This will minimize cost while protecting against inflated acceptance rates and declining quality standards.
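To make the incentive argument concrete, here is a toy comparison of two charging schemes. The function names, fee levels, and rates are hypothetical placeholders, not figures from the comment; the point is only structural. Under pay-on-acceptance, a journal’s income grows with its acceptance rate, whereas under no-fault charging the acceptance rate does not enter the calculation at all.

```python
# Hypothetical illustration: all fees and rates below are placeholders.

def pay_on_acceptance(submissions: int, acceptance_rate: float, fee: float) -> float:
    """Journal income when authors pay only if their paper is accepted."""
    return submissions * acceptance_rate * fee

def no_fault(submissions: int, rounds_per_submission: float, charge_per_round: float) -> float:
    """Journal income when each round of refereeing is paid for, whatever the outcome.

    The acceptance rate is not an input, so accepting more papers earns nothing extra.
    """
    return submissions * rounds_per_submission * charge_per_round

for rate in (0.2, 0.5, 0.9):
    # Income rises with the acceptance rate only in the first scheme.
    print(rate, pay_on_acceptance(100, rate, 1000.0), no_fault(100, 1.5, 400.0))
```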

That post-Green, no-fault Gold will be Fair Gold. Today’s pre-Green (fee-based) Gold is Fool’s Gold.

None of this applies to no-fee Gold.

Obviously, as Peter Suber and others have correctly pointed out, none of this applies to the many Gold OA journals that are not fee-based (i.e., do not charge the author for publication, but continue to rely instead on subscriptions, subsidies, or voluntarism). Hence it is not fair to tar all Gold OA with that brush. Nor is it fair to assume — without testing it — that non-OA journals would have come out unscathed, if they had been included in the sting.

But the basic outcome is probably still solid: Fee-based Gold OA has provided an irresistible opportunity to create junk journals and dupe authors into feeding their publish-or-perish needs via pay-to-publish under the guise of fulfilling the growing clamour for OA:

Publishing in a reputable, established journal and self-archiving the refereed draft would have accomplished the very same purpose, while continuing to meet the peer-review quality standards for which the journal has a track record — and without paying an extra penny.

But the most important message is that OA is not identical with Gold OA (fee-based or not), and hence conclusions about peer-review standards of fee-based Gold OA journals are not conclusions about the peer-review standards of OA — which, with Green OA, are identical to those of non-OA.

For some peer-review stings of non-OA journals, see below:

Peters, D. P., & Ceci, S. J. (1982). Peer-review practices of psychological journals: The fate of published articles, submitted again. Behavioral and Brain Sciences, 5(2), 187-195.

Harnad, S. R. (Ed.). (1982). Peer commentary on peer review: A case study in scientific quality control (Vol. 5, No. 2). Cambridge University Press.

Harnad, S. (1998/2000/2004). The invisible hand of peer review. Nature [online] (5 Nov. 1998); Exploit Interactive 5 (2000); and in Shatz, B. (Ed.) (2004), Peer Review: A Critical Inquiry (pp. 235-242). Rowman & Littlefield.

Harnad, S. (2010). No-fault peer review charges: The price of selectivity need not be access denied or delayed. D-Lib Magazine, 16(7/8).

This would be an interesting exercise if it weren’t for the selection bias and the lack of a control group.

There is plenty of evidence that impact factors (IFs) do not predict any aspect of the quality of published articles (other than high-IF publications being less reliable):

http://www.frontiersin.org/Human_Neuroscience/10.3389/fnhum.2013.00291/full

So any evidence to the contrary (as your analysis might suggest) would be very interesting, if it were properly collected.

Thanks for the link to your work, and to others’ work, on the negative consequences of journal ranking systems. The work of Fang et al. (2012) is quite interesting: it shows a statistically significant (but quite weak) effect of journal rank on the number of retractions across all causes. Here are their exact results: “Journal-impact factor showed a highly significant correlation with the number of retractions for fraud or suspected fraud (A) (n = 889 articles in 324 journals, R² = 0.08664, P < 0.0001) and error (B) (n = 437 articles in 218 journals, R² = 0.1142, P < 0.0001), and a slight correlation with the number of retractions for plagiarism or duplicate publication (C) (n = 490 articles in 357 journals, R² = 0.01420, P = 0.0243).”

These are intriguing results. However, your concern about the lack of “control groups” might also apply to them. There is every reason to believe that articles published in high-impact journals receive greater reads and scrutiny from peers, and thus errors or even evidence of fraud is more likely to be detected in publications in high impact journals, all things equal. What would be great, for instance, would be to take two random samples of papers, one from high-impact journals and one from low- or no-impact journals, and simply scan them for obvious errors (assessing fraud, of course, would be much trickier). Are you aware of any such studies?
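One way to see why these correlations are “statistically significant but quite weak” is to convert the quoted R² values into the share of variance explained and the size of the correlation coefficient. The sketch below assumes each R² comes from a simple linear fit, so that its square root gives |r|; the square root recovers only the magnitude of r, not its sign. `summarize` is a hypothetical helper name.

```python
from math import sqrt

# R² values as quoted from Fang et al. (2012) in the comment above.
R_SQUARED = {
    "fraud or suspected fraud": 0.08664,
    "error": 0.1142,
    "plagiarism or duplicate publication": 0.01420,
}

def summarize(r2: float) -> tuple[float, float]:
    """Return (% of variance explained, |r|), assuming a simple linear fit."""
    return 100 * r2, sqrt(r2)

for cause, r2 in R_SQUARED.items():
    pct, r = summarize(r2)
    print(f"{cause}: {pct:.1f}% of variance explained, |r| = {r:.2f}")
```

On these figures, impact factor accounts for roughly 9%, 11%, and 1% of the variation in retraction counts for the three causes, leaving most of the variation unexplained.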

Fang, F. C., Steen, R. G., and Casadevall, A. (2012). Misconduct accounts for the majority of retracted scientific publications. Proc. Natl. Acad. Sci. U.S.A. 109, 17028–17033. doi: 10.1073/pnas.1212247109

There are similar studies, which we cite in our paper. IIRC, we cite four of them (they study basic methodology). Some find a small positive effect, others find none. In summary, we conclude that methodology appears not to be correlated with journal rank in any practically meaningful way, but further study is required.

There are more data in our review suggesting that article ‘quality’ is not better, and is perhaps worse, in high-ranking journals, which is consistent with the retraction data.

We also discuss significant visibility effects that exacerbate the quality issues in high-ranking journals by increasing the error-detection rate on top of shoddy science.


What happens to the t-test result if you pool the impact factors of the “still in review” journals with those of the “accepted” ones? “Still in review” would seem to indicate an unreasonably long delay, and thus a failure of peer review (albeit of a different kind).

Brian wrote: “There is every reason to believe that articles published in high-impact journals receive greater reads and scrutiny from peers, and thus errors or even evidence of fraud is more likely to be detected in publications in high impact journals, all things equal.”

First, journals with the highest Impact Factors typically boast of “high-quality peer review,” implying that they have the most stringent review process and are the least likely to publish problematic papers in the first place.

Second, most retractions are for misconduct (according to the paper by Fang and colleagues that you cite), so it seems strange that people who engage in misconduct would seek publication in the venue most likely to get them caught. This speaks to how distorted the incentive structure is.

Also: ping!

http://neurodojo.blogspot.com/2013/10/journal-sting-black-eye-for-thomson.html